We help clients build trust

GhostWatch helps clients build trust and confidence. We are a leading provider of managed compliance and security services. Backed by industry-leading technology, our team of experts delivers world-class service 24/7.


Managed Security

End-to-end security and compliance across your entire tech stack

GhostWatch includes all software, hardware, and services.

Threat Intelligence

Connected to a global community of threat researchers and security professionals.

Cloud and Multi-cloud

Our managed security services give you a complete, scalable security capability designed to detect and combat cyberattacks in your public cloud environments. In addition to on-premises servers, GhostWatch supports public cloud installations on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform, and it monitors all of your assets across multiple cloud and on-premises environments.

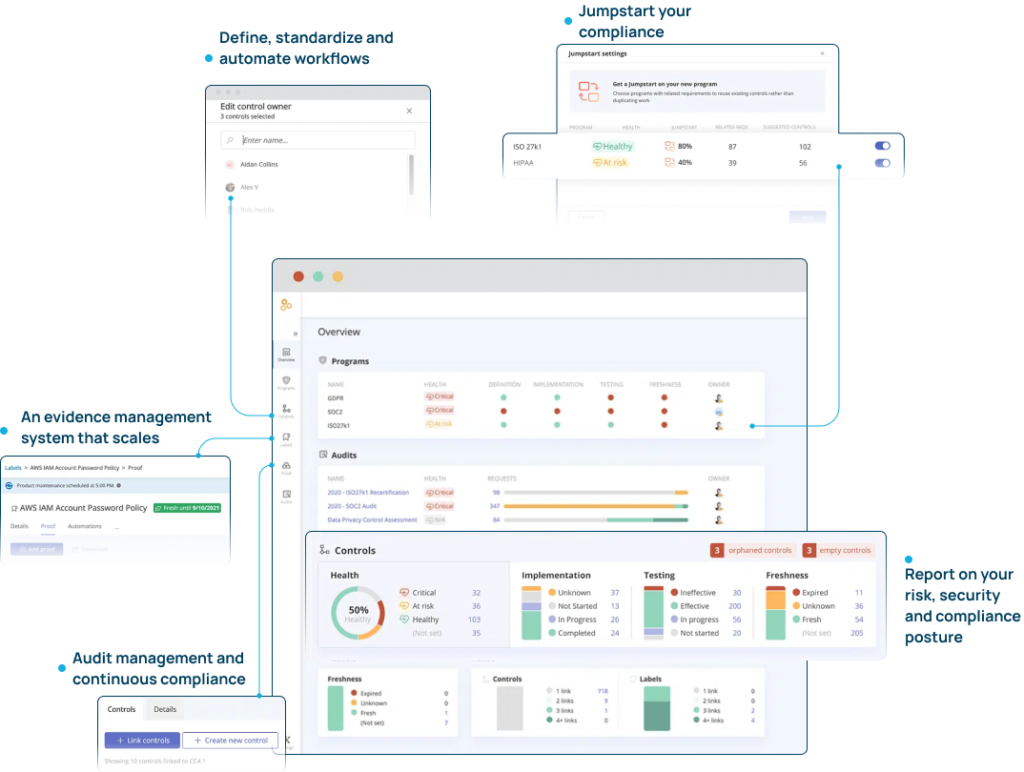

Managed Compliance

Full-Service Compliance

GhostWatch manages the entire compliance journey with real-time visibility at a reasonable cost.

Having performed hundreds of compliance assessments, GhostWatch has extensive experience guiding clients to compliance and helping them maintain it.

Supported Frameworks

• Over 72 industry frameworks and growing

• Automated updates to meet the latest regulations and industry standards

• Controls mapped to multiple regulatory standards, reducing time to compliance

• Custom frameworks on demand: vendor compliance, data privacy, ESG, employee onboarding, cloud service providers

SOC 2, PCI DSS, ISO 27001, HIPAA, CSA STAR, GDPR, ISO 27701, HITRUST, Microsoft SSPA (DPR), FedRAMP, CIS, CCPA, NIST CSF, FISMA, 23 NYCRR 500

Integrate and Automate

Integrations

GhostWatch integrates with your tech stack, including cloud infrastructure, DevOps, security, and business applications, so that compliance work fits seamlessly into your existing business processes and workflows.

Why GhostWatch

Exceptional Security

• Safeguard your critical assets

• Advanced technology

• Deep technical expertise

• Regulatory compliance

Fast Deployment

• All-in-one solution: hardware, software, and services

• Experienced deployment team

• Professionally managed

• Go live in days, not months

Affordable Solution

• Transparent pricing

• Fixed monthly fee

• Scalable

• 12-month term

24/7 Monitoring

• Always-on

• World-class security operations centers

• Multi-continent operations

• Rapid response